2023 - 2024

I came back to OpenAI, where I built a new team working on midtraining and synthetic data generation.

2015 - 2017

I was a research scientist and a founding member at OpenAI.

2011 - 2015

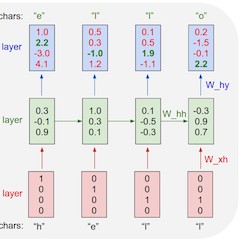

My PhD focused on convolutional/recurrent neural networks and their applications in computer vision, natural language processing, and their intersection. My adviser was Fei-Fei Li at the Stanford Vision Lab, and I also had the pleasure of working with Daphne Koller, Andrew Ng, Sebastian Thrun, and Vladlen Koltun during the first-year rotation program.

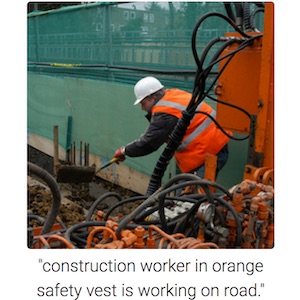

I designed and was the primary instructor for the first deep learning class at Stanford, CS 231n: Convolutional Neural Networks for Visual Recognition. The class became one of the largest at Stanford, growing from 150 enrolled students in 2015 to 330 in 2016 and 750 in 2017.

Along the way I squeezed in three internships: at (baby) Google Brain in 2011, working on large-scale unsupervised learning from videos; at Google Research in 2013, working on large-scale supervised learning on YouTube videos; and finally at DeepMind in 2015, on the deep reinforcement learning team with Koray Kavukcuoglu and Vlad Mnih.

2009 - 2011

MSc at the University of British Columbia, where I worked with Michiel van de Panne on learning controllers for physically simulated figures (i.e., machine learning for agile robotics, but in a physical simulation).

2005 - 2009

BSc at the University of Toronto with a double major in computer science and physics and a minor in math. This is where I first got into deep learning, attending Geoff Hinton's class and reading groups.